Christmas and the new year are getting closer. Time for some of us to step back from our daily activities, take some rest and reflect on the past year. The next issue of this newsletter will be a retrospective on 2015's most popular AI news and resources.

Until then, I wish you joyful and happy holidays!

In the News

Introducing OpenAI

Sam Altman, Elon Musk, Peter Thiel and others commit time and money (more than $1B) to OpenAI, a new non-profit artificial intelligence research lab. This is great news for the AI community, as the lab aims to make all of its research public and open.

Google raids threaten Canada's lead in Artificial Intelligence

Canada had an edge in AI but risks losing it as its star scientists and entrepreneurs are hired away.

Learning

Metadata investigation: inside Hacking Team

A superb analysis of the metadata from Hacking Team's leaked internal email database. It shows how much information can be extracted from metadata alone.

10 Deep Learning Trends at NIPS 2015

Current trends include bigger and deeper nets, growing similarities between NLP and image analysis, cool new areas such as model compression and attention models, and stronger links between neural net research and productization.

Bad data guide

This nice guide lists common mistakes and useful tricks for working with datasets.

Accelerating warehouse operations with neural networks

Engineers at Zalando share insights on optimizing their fashion warehouses with deep neural networks.

Software tools & code

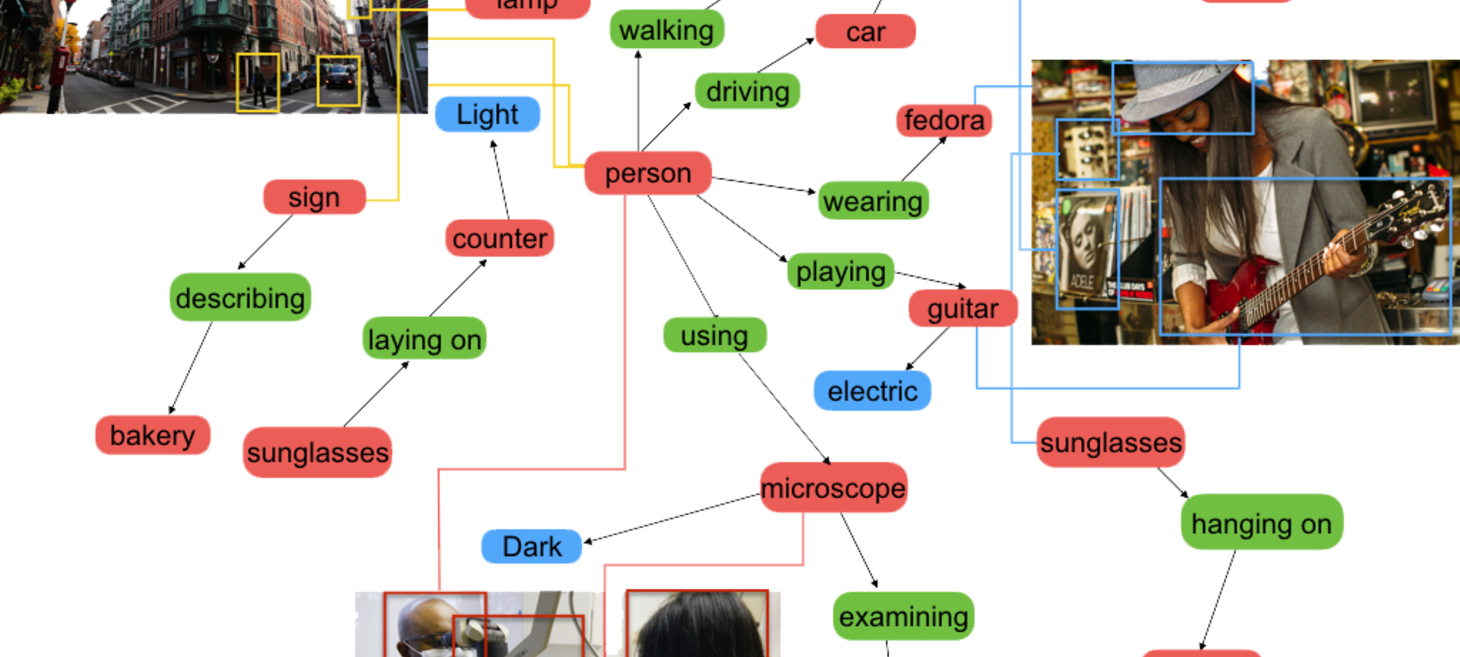

Visual Genome

Stanford releases Visual Genome, an incredible dataset connecting structured language to images. Great material for anyone building object detection, image description or image tagging systems.

Implementing a CNN for NLP in TensorFlow

A nice tutorial on implementing a Convolutional Neural Network for sentence classification in TensorFlow.

Tour of Real-World Machine Learning Problems

A nice list of the most popular machine learning problems from Kaggle and from research.

Hardware

Inside Baidu's GPU clusters

Baidu aims to be on par with Google and Facebook in machine learning, and has been building up both its teams and its architecture. Here is a look inside the hardware clusters powering its deep learning efforts.

Some thoughts

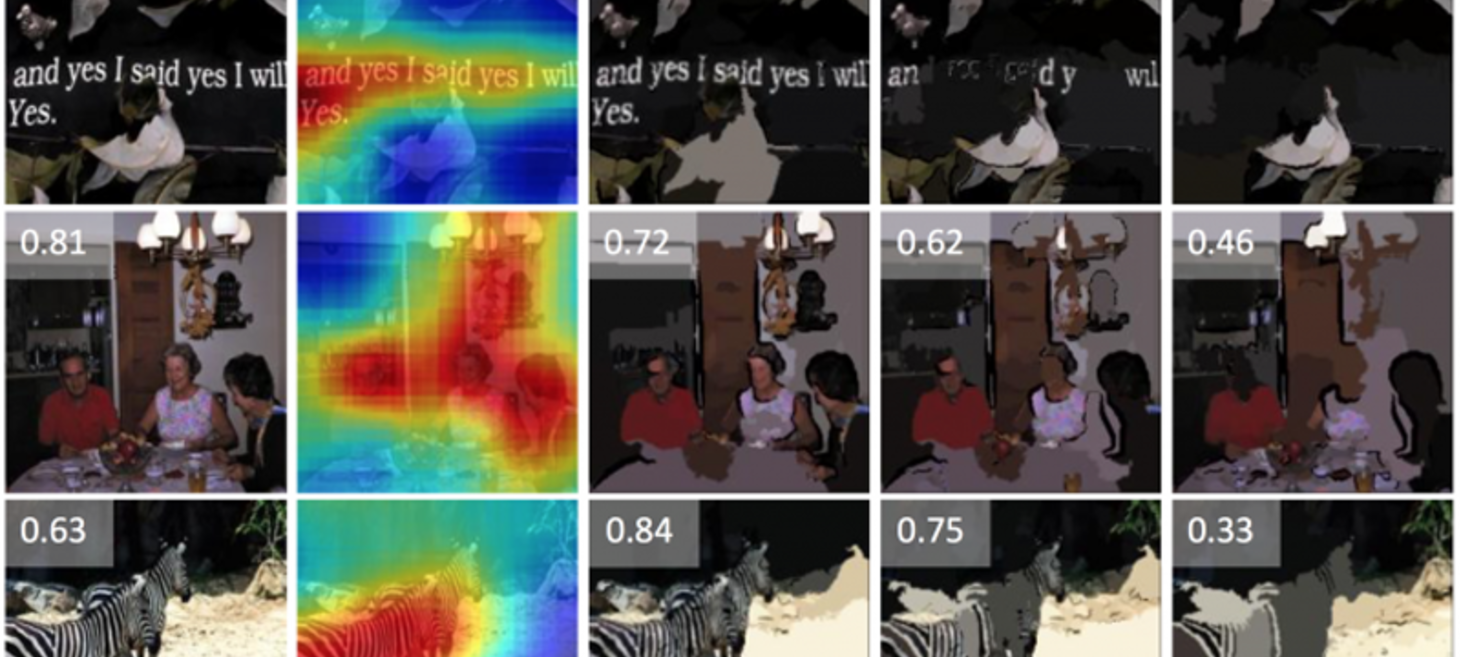

Large-Scale Image Memorability

A tool developed by a team at MIT to determine the most "memorable" part of an image. Fun to play with, and backed by an interesting paper describing how it works.

Inside Deep Dreams: how Google made its computers go crazy

Why the neural net project creating wild visions has meaning for art, science, philosophy, and our view of reality.

About

This newsletter is a weekly collection of AI news and resources.

If you find it worthwhile, please forward to your friends and colleagues, or share on your favorite network!


Suggestions and comments are more than welcome: just reply to this email. Thanks!